Building Virtual Worlds

(2016 @ CMU Entertainment Technology Center)

I worked as an interaction designer alongside engineers in agile teams of 4-5 people. Through rapid prototyping, we explored best practices for user interaction on mixed-reality platforms. I ideated, researched, designed, and implemented multiple engaging user experiences.

I led the 2D and 3D visual design and interaction flow, creating 2D and 3D UI, including environments, characters, and animations, to tell compelling game stories.

Platforms: Leap Motion + Oculus, HTC Vive, eye tracker, Kinect, CAVE, Makey Makey

Software: Maya (3D UI modeling, rigging, animation), Unity, Photoshop, Sketch (2D UI), Premiere, After Effects

KUNGFU MONK VR FESTIVAL 2016

In this game, we explored moving and dodging in VR space. Using the HTC VIVE, guests walk into an ancient Eastern environment surrounded by traditional music and architecture. They hold swords to hit flying darts, move around a square platform to avoid fire, and form a Tai-chi shape to become the Kungfu master.

Role: Lead 2D&3D Interaction Designer in a team of 5

Project Info: 15 days, 5 team members

Platform: HTC VIVE + Unity

3D Interface

Compared to traditional screen experiences, we found that in VR, designing the user interface was like designing the environment. We explored various ways to integrate the mechanics into 3D objects. By combining a cinematic opening with staging, we aimed to give users an immersive experience in which they would not notice the boundaries of the VIVE play space.

Images: Hitting, Winning, Losing, Giving out darts, Winning dance, Woodenman

THE CHARACTERS

In the VR environment, guests were sometimes confused about their identity. At first, I designed only the interaction with the master. We then decided to add other monks at the beginning and in the winning and losing stages to help guests feel more immersed in the scene.

PLAYTEST

The original design used detection across multiple platforms (Kinect + HTC VIVE): the VIVE tracked positions while the Kinect monitored foot movement. However, during playtesting we realized that a limited number of obstacles gave guests more room to strategize, so we simplified the design into waves of obstacles that guests need to hit or dodge.

LEARNINGS

- Tech: This was a game prototype built in a short time frame. We were too ambitious in trying to use different platforms to implement a 360-degree game experience. Recognizing the technical constraints, we decided to focus on the HTC VIVE for more stable gameplay.

- UX: It was imperative to guide the user, but indirectly, so that the user could become immersed in the environment without being introduced to too many mechanics.

- 3D vs. 2D interaction: A 3D environment still needs some 2D elements fixed to the headset view. They help users know which stage they are in instead of searching for the next step.

- Production: To build a game in a short time frame, it helps for developers and artists to start at the same time once the user experience is defined. Assets can be added to Unity after the basic framework is in place, so nobody loses time waiting.

INTOUCH VR MULTIPLAYER 2017

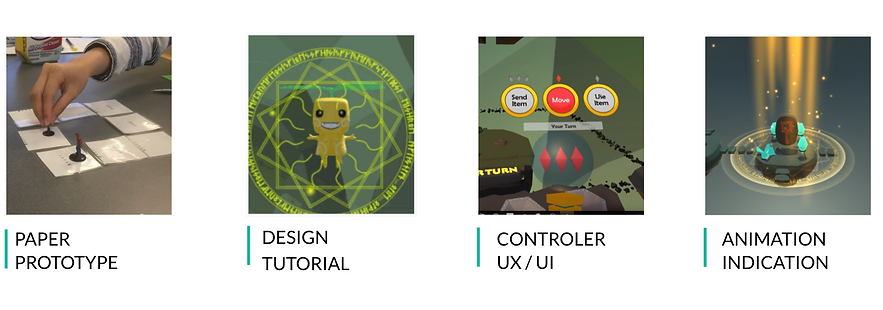

In this experience, we tried to bring tabletop game elements into VR by developing a two-player game for Oculus Rift and Touch. We started with paper prototypes and then brought the mechanics into virtual reality. As lead interaction designer, I created tutorials that helped users understand the game's complexity and introduced the controller mechanics.

Role: 3D Interaction Designer + 2D concept artist in a team of 6

Project Length: 4 months

Platform: Oculus Touch + Unity

INTERACTION DESIGN

TUTORIAL DESIGN

The tutorial was a crucial part of our experience because of the complex mechanics. By designing the onboarding experience, I also helped connect the steps, stages, and scenarios.

At first, I designed a very thorough tutorial with a step-by-step voice guide. However, playtesting showed that it was simply too long and too much to digest.

So we listed everything we needed to teach and used a helper character throughout the game to provide guidance.

For the basic functions, I made a graphic guide to the controller buttons so that first-time players could take their time figuring them out instead of struggling to keep up with audio instructions.

We also placed notifications inside the game, so players are reminded at each turn and round with sound and visual cues, which relieves them of having to memorize everything.

As a result, the game tutorial was shortened significantly to the three basic features players need to navigate the game. It now takes an average of 1-2 minutes to finish, and overall feedback on showcase day was good.

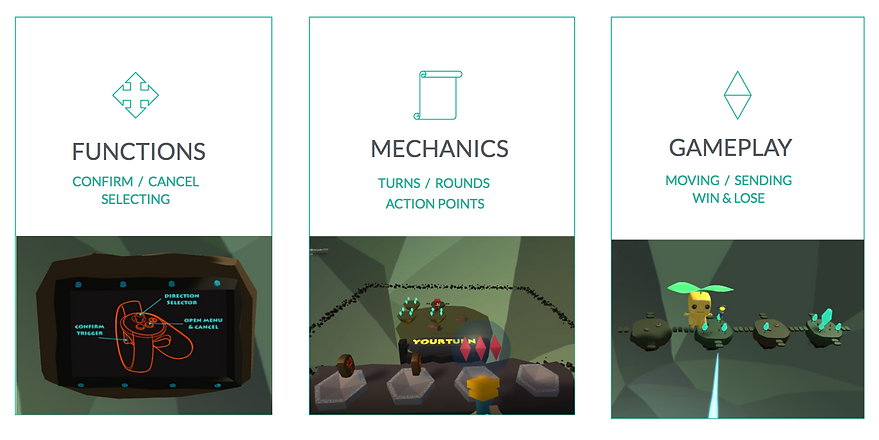

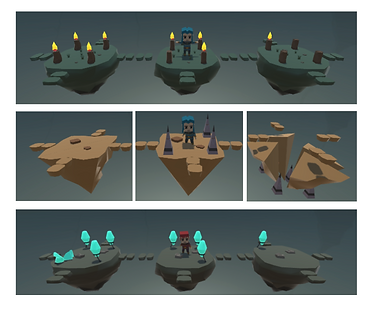

3D INTERFACE DESIGN

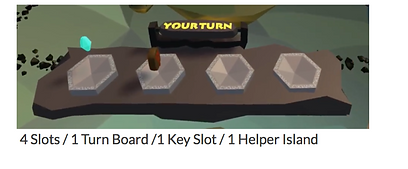

We created different concepts to represent tile life, turns, and rounds. Through playtesting, we iterated on the original concepts to make the gameplay between the two players clearer. We also iterated on the inventory to make the purpose of the slots clearer.

CONTROLLER

Controller use was important because our mechanics were relatively complicated. We broke the interaction down into three key elements and integrated the physical controller with the virtual staff. With a two-level menu indicated by diamonds on each button, players can see the stats of their objects.

ROUND INDICATOR

INVENTORY ITERATION

ANIMATION INDICATION

We later added more visual cues as animations in Unity. These animations helped us:

1. Make the experience more intriguing.

2. Provide clearer feedback for each action the user takes.

3. Give a clear visual hierarchy.

4. Lead the user indirectly to focus on certain elements in the current state.

LEARNINGS

Gameplay: We set out to explore whether a complex board game could be implemented in VR. We realized we were adding complexity to the gameplay, since interacting in VR was less direct than playing in physical space. Our takeaway for designing in VR: carefully evaluate what benefit the user gains from the VR space, and whether it is necessary or rewarding enough for the user to learn all the steps.

Tutorials: Contrary to my initial assumption, the best approach to a tutorial was not to bundle everything together and train the user at the beginning. Without context, users may not remember the mechanics. A better solution is to teach inside the game as much as possible and make the teach-learn-reward cycle part of the experience, giving the user positive feedback.

Visual styles: Visual effects greatly helped users understand their location, their turns and rounds, and their current stats, feedback they could not get in the physical world. The space, the size of the characters, and the viewpoint changed the user's perception of this virtual world. More playtesting would certainly help refine the final design.

TILE INDICATORS

Role: Lead 3D Interaction Designer in a team of 5

Length: 2 weeks Platform: HTC VIVE + Unity

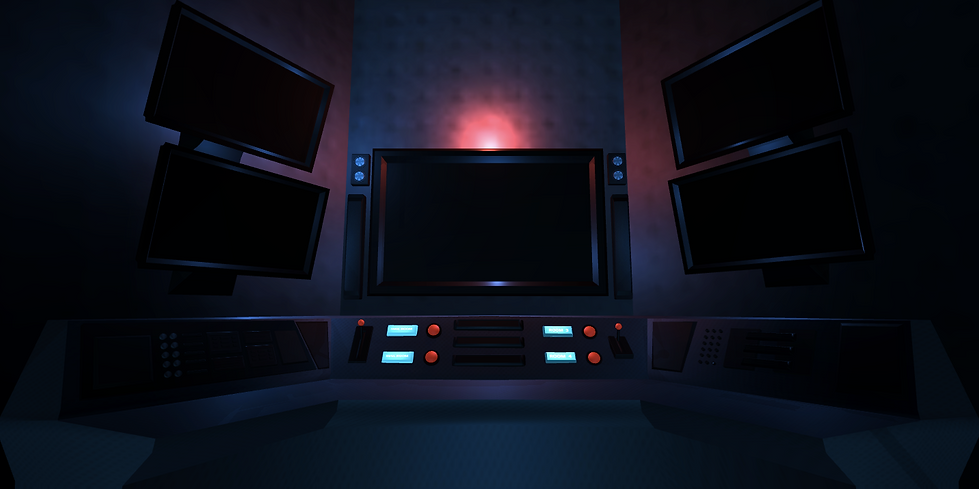

[ HTC VIVE ] Doors

In this narrative experience, we designed a control room for the user to decide the fate of the people in the security camera. We want to create this moral dilemma in this game and see if the guest will emphasize with the characters and make the decision of whom to save and whom to sacrifice.

3D INTERFACE DESIGN

[ EYE-GAZER ] Save or Sushi

This is a game created for the Eyegazer. Guests use their eye movements to control the lovely friends and keep them from falling onto the knives.

Role: UX Designer + 2D Artist

Length: 2 weeks

Platform: Eyegazer + Unity

Animations

[ CAVE + Makey Makey ] Marley the Plane

The irresponsible, drunk captain can't fly the plane. Our two guests must help each other read different diagrams and get through the storms with help from the unreliable captain and vague instructions from the radio! We built control panels to simulate an airplane cockpit and used the CAVE platform to give guests a virtual flying experience.


ENVIRONMENT ART

Role: 2D&3D Interaction Designer in a team of 5

Length: 2 weeks

Platform: CAVE + Makey Makey
